Running a High-Scale, Low-Latency Database with Zero Data in Memory?

I was talking to one of my oldest database colleagues (and a very dear friend of mine). We were chatting about how key/value stores and databases are evolving. My friend mentioned how they always seem to revolve around in-memory solutions and caches. The main rant was that this kind of thing doesn’t scale well, while being expensive and complicated to maintain.

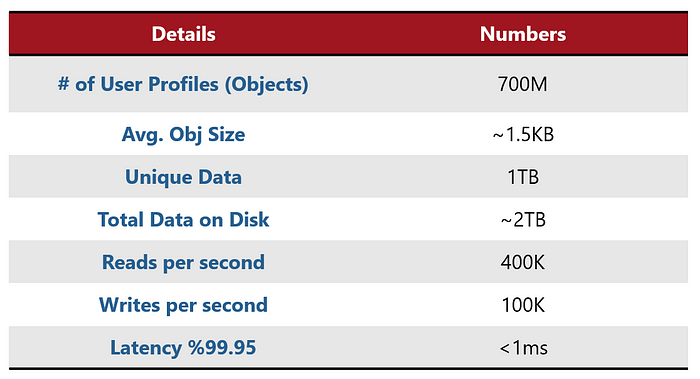

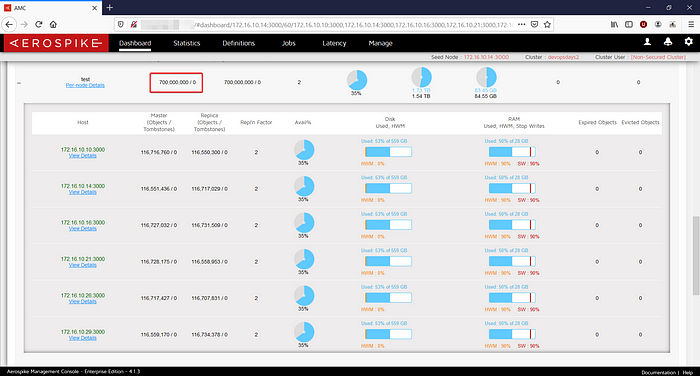

My friend’s background story was that they are running an application that uses a user profile store with almost 700 million profiles (their total size is around 2TB, with a replication factor of 2). Access to the user profiles is very random. In short, the application is not able to “guess” which user it will need next. Therefore, they could not pre-heat the data into memory. Their main issue was that every now and then they were getting peaks of over 500k operations per second of mixed reads and writes, and that didn’t scale very well.

User Profile use case summary

In my friend’s mind, the only thing they could do was use some kind of memory-based solution. They could either use an in-memory store, which, as we said before, doesn’t scale well and is hard to maintain, or use a traditional cache-first solution and lose some of the low latency they require, because most of the records are not cached.

I explained that Aerospike is different. In Aerospike we can store their 700 million profiles, 2TB of data, and provide said 500k TPS (400k reads and 100k writes, concurrently) with sub-1ms latency, but without storing any of the data in memory. Memory usage would then be minimal: under 5 percent of the data size for that use case.

My friend was suspicious: “What kind of wizardry are you pulling here?!”

So since I am not a wizard (yet; I am still convinced my Hogwarts acceptance letter is on its way, and I’m almost sure the owl just got delayed), I went ahead and created a modest demo cluster for them, just to show my “magic”.

Aerospike Cluster: Hybrid Memory Architecture

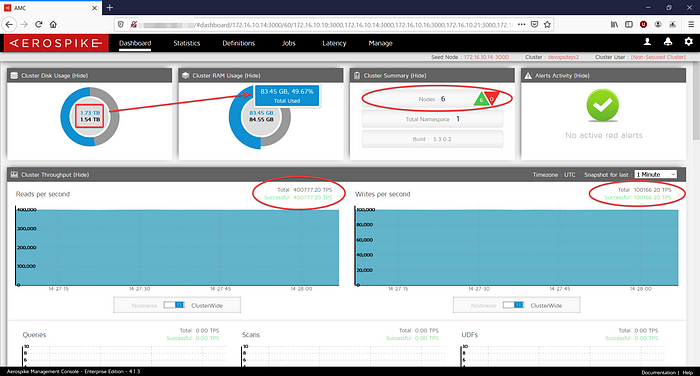

In this next screenshot, we can see the result: a 6 node cluster, running 500k TPS: 400k reads + 100k writes, storing 1.73TB of data but only utilizing 83.45GB of RAM.

Running a 6 node cluster: 1.73TB of data, 84 GB of RAM

This cluster doesn’t have specialized hardware of any kind. It’s using 6 nodes of AWS’ c5ad.4xlarge (a standard option for a node), which means a total of 192GB RAM and 3.5TB of ephemeral devices, cluster-wide. From the pricing perspective, it’s less than $2,000/month. That’s way less than what they pay now (and I price-tagged it before any discounts).

Obviously, if the cluster has a total of 192GB of DRAM, the data is not stored fully in memory. In this case, the application fetches exactly 0 percent of the data from any sort of cache. So, for the 1.73TB of data, the memory usage is under 84GB. I would note that the operating system (the Linux kernel) is able to keep some of the blocks in the page cache if needed. That is not even part of the database optimization; this is an OS-level thing! It would make things even faster with access patterns like Common Records or Read After Write.
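For readers who want to sanity-check that RAM figure, here is a rough back-of-the-envelope sketch. It assumes that what sits in memory is essentially one primary index entry per record copy, at 64 bytes per entry. The 64-byte figure comes from Aerospike's sizing documentation rather than from this post, and the 1.73TB is read as binary units, so treat the result as an estimate, not a measurement.

```python
# Back-of-the-envelope check of the RAM figure, using numbers from this post.
# ASSUMPTION: 64 bytes of primary index per record copy (not stated in the post).
INDEX_ENTRY_BYTES = 64
PROFILES = 700_000_000      # "almost 700 million profiles"
REPLICATION_FACTOR = 2
DATA_ON_FLASH_TIB = 1.73    # from the screenshot, assumed to be binary units

GIB = 2 ** 30


def index_ram_gib(records, replication_factor, entry_bytes=INDEX_ENTRY_BYTES):
    """RAM needed to index every copy of every record, in GiB."""
    return records * replication_factor * entry_bytes / GIB


ram_gib = index_ram_gib(PROFILES, REPLICATION_FACTOR)
ram_share = ram_gib / (DATA_ON_FLASH_TIB * 1024)

print(f"index RAM: {ram_gib:.2f} GiB")
print(f"RAM as share of data on flash: {ram_share:.1%}")
```

Under those assumptions the estimate lands very close to the RAM usage in the screenshot, and comfortably under the 5 percent mentioned earlier.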

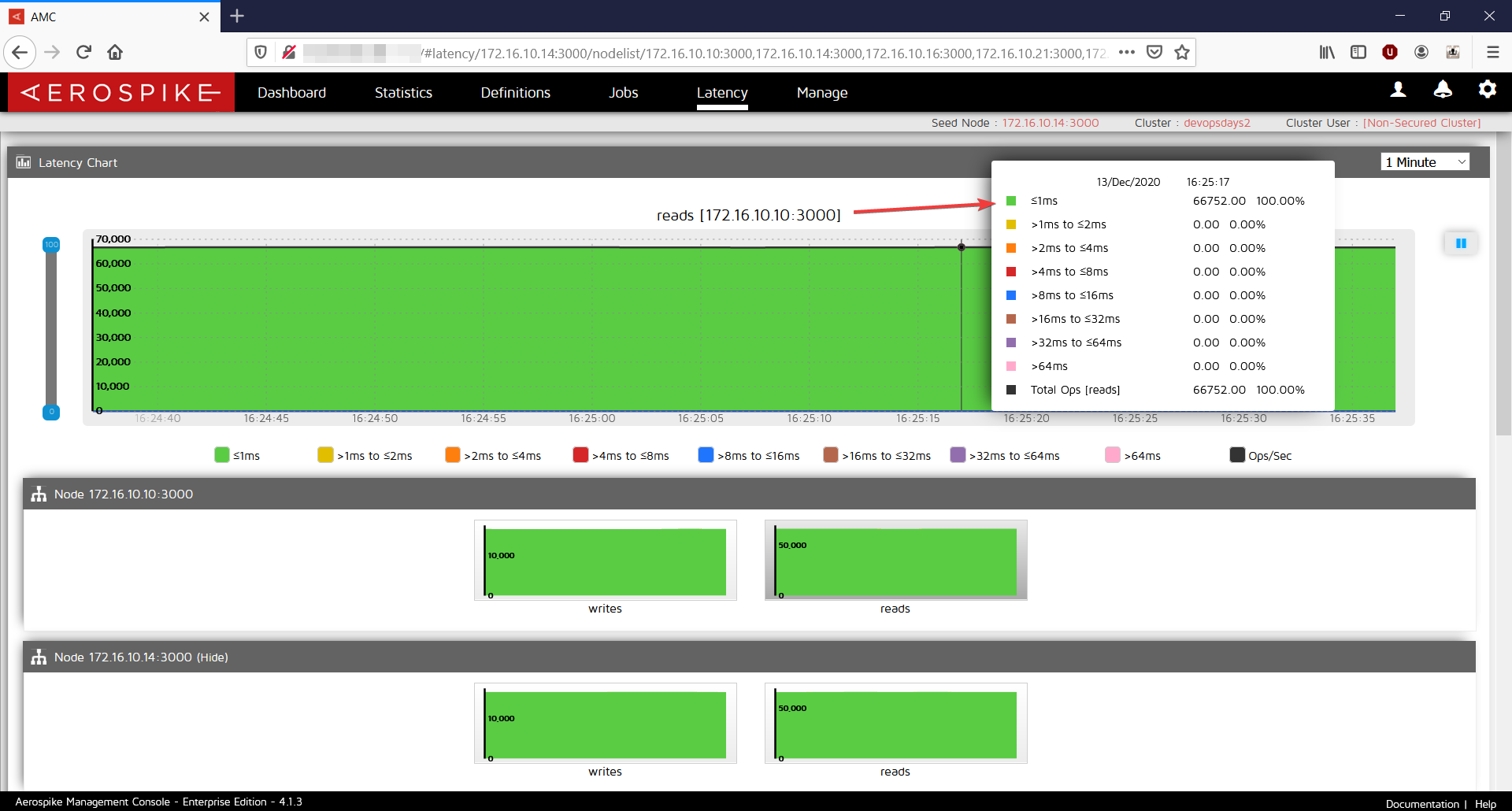

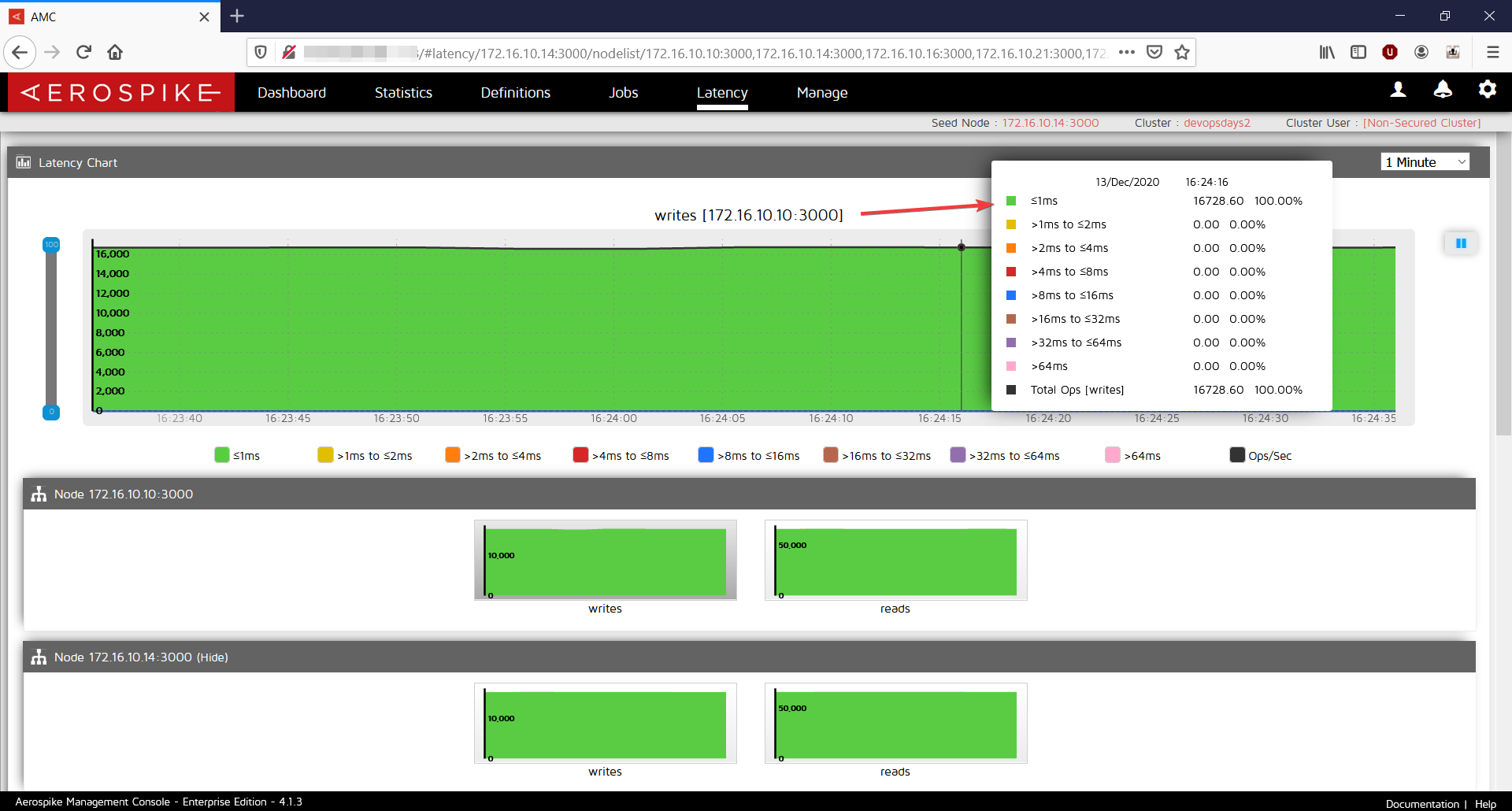

The cool thing is the performance. Predictable performance is something every application needs, and for the peaks I described earlier, we can see in the next screenshots a latency of under 1ms for both reads and writes!

Read latency, on a mixed load, sub 1ms

Write latency, on a mixed load, sub 1ms

So, this is not trickery and not a magic show; it’s just that Aerospike is different.

It uses a variety of patented flash optimizations and a special architecture called “Hybrid Memory Architecture”. This means the data is not expected to be stored in memory. Instead, it is stored on a flash device (SSD, NVMe, or Intel’s Persistent Memory), and the memory is used only for the primary index: pointers to where the data actually is on disk. The data is evenly distributed between the nodes, so scaling up (or down) is super easy.
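To make the "index in memory, data on flash" idea concrete, here is a toy sketch. It is not how Aerospike is implemented; `ToyHybridStore` and everything in it are hypothetical names made up for illustration. The only point is that RAM holds a small pointer per record while the record itself is read from a file on each request.

```python
import os
import tempfile


class ToyHybridStore:
    """Toy model: values live in a file, RAM holds only key -> (offset, length)."""

    def __init__(self, path):
        self.index = {}                 # the only per-record state kept in memory
        self.data = open(path, "a+b")   # append-only data file standing in for flash

    def put(self, key, value: bytes):
        # Append the value to the file and point the index at the new copy.
        self.data.seek(0, os.SEEK_END)
        offset = self.data.tell()
        self.data.write(value)
        self.data.flush()
        self.index[key] = (offset, len(value))

    def get(self, key):
        # One index lookup in RAM, then one read from the device.
        offset, length = self.index[key]
        self.data.seek(offset)
        return self.data.read(length)

    def close(self):
        self.data.close()


with tempfile.TemporaryDirectory() as tmp:
    store = ToyHybridStore(os.path.join(tmp, "profiles.dat"))
    store.put("user:1", b'{"name": "Ada"}')
    store.put("user:2", b'{"name": "Linus"}')
    profile = store.get("user:1")
    index_entries = len(store.index)
    store.close()

print(profile, index_entries)
```

Notice that the size of the index depends on the number of records, not on how large each profile is, which is why the memory footprint can stay small relative to the data.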

Data is evenly distributed, easy to scale
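Here is a small sketch of why hashing keys tends to give an even spread. Aerospike documents a fixed 4096 partitions per namespace, with records assigned to a partition by a hash of the key. In the sketch below the hash (SHA-1) is a stand-in, and the round-robin partition-to-node map is a hypothetical simplification, not the real assignment algorithm.

```python
import hashlib
from collections import Counter

N_PARTITIONS = 4096  # fixed partition count per namespace, per Aerospike's docs


def partition_id(key: str) -> int:
    # SHA-1 is a stand-in here; any well-mixed hash shows the same effect.
    digest = hashlib.sha1(key.encode()).digest()
    return int.from_bytes(digest[:2], "little") % N_PARTITIONS


def node_for(key: str, n_nodes: int) -> int:
    # Hypothetical round-robin partition map, for illustration only.
    return partition_id(key) % n_nodes


def spread(n_keys: int, n_nodes: int) -> Counter:
    """Count how many of n_keys synthetic user keys land on each node."""
    return Counter(node_for(f"user:{i}", n_nodes) for i in range(n_keys))


counts = spread(120_000, 6)
for node, n in sorted(counts.items()):
    print(f"node {node}: {n} keys")
```

Each node should end up with roughly one sixth of the keys, even though the keys themselves follow no pattern the application could predict.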
